Get Rid of Half Your Stuff

I started selling off my belongings to pay for a recent vacation to Alaska. At first I was kind of worried about getting rid of my stuff. All my old video games went for 50 bucks, and thousands of hours were invested there. Old TVs, bags of clothes, my dining room table, mirrors, furniture, bike racks, beds… all gone. To be honest, I’ve never felt better. I know the only things I have left are the things I really value, and it feels great. So if you want to feel great, sell half your stuff. Seriously. And take a vacation with the money you earn doing it!

SQL Full Text Query Across Multiple Tables

Part of my job is updating and maintaining some legacy web applications. Most of the applications were written back when our network consisted of 6 laptops, and we never imagined we would grow so big. For this reason, most of the applications were not designed with scalability in mind. This is an example of just such an oversight: an SQL query seemingly worked fine for 200 records, but now that we have 10,000 we are seeing some serious issues.

Lately, people have been complaining that one of our web applications was timing out during searches. Since we are disconnected from the internet, we thought that turning off the full-text service property that forces signature verification (according to http://support.microsoft.com/kb/915850) might help. When this produced no improvement, we rebuilt the full-text catalogs. That produced slightly better results, but a fair number of queries were still timing out.

Upon further investigation, I found that the query itself was running fine. It was just taking over 30 seconds, which is the default value of the SqlCommand.CommandTimeout property. In other words, SQL Server hadn't failed; it simply hadn't finished yet. Once 30 seconds passed, the web page gave up and threw an error saying the server had timed out or was unavailable. The timeout was really coming from the web page's own command, not from the server.

An SQL query that takes over 30 seconds is a sign of inefficiency, especially considering the hardware we are running. Digging into the code, I found that the query was being built by joining SQL CONTAINS predicates together with OR. There was a separate CONTAINS for each column in the full-text catalog, for a grand total of 5. I narrowed this down to 3 CONTAINS predicates by grouping the columns from each table into a single CONTAINS (a CONTAINS column list can only name columns from one table). It was still extremely slow. Then I broke the query out into one query per table, thinking I might give the user the ability to expand the search manually. When I did this, I noticed that each query ran almost instantly (~0.01 sec).

So the solution was to run the query on each table individually, then use a UNION to combine the results! On the production database this took the query from 66.956 sec down to 0.116 sec. What a difference!
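The fix is easy to generate when the tables and their full-text columns are known up front. Below is a minimal Python sketch that builds the SQL text with one SELECT per table, a single CONTAINS per table over that table's indexed columns, and UNION between them. The table names, column names, the shared RecordID key column, and the @SearchTerm parameter are all hypothetical. The sketch assumes each table carries the ID of the record being searched for, because every SELECT in a UNION must return the same columns.

```python
def build_union_fulltext_query(tables, key_column="RecordID", param="@SearchTerm"):
    """Build one SELECT per table, each with a single CONTAINS over that
    table's full-text columns, and combine them with UNION.

    tables: dict mapping a (hypothetical) table name to its full-text columns.
    Only trusted, hard-coded table/column names are spliced into the SQL;
    the user's search term stays a parameter.
    """
    selects = []
    for table, columns in tables.items():
        # A CONTAINS column list may only name columns from one table.
        if len(columns) == 1:
            column_spec = columns[0]
        else:
            column_spec = "(" + ", ".join(columns) + ")"
        selects.append(
            f"SELECT {key_column} FROM {table} "
            f"WHERE CONTAINS({column_spec}, {param})"
        )
    # UNION (not UNION ALL) also drops records that matched in several tables.
    return "\nUNION\n".join(selects)


# Hypothetical schema: 5 full-text columns spread over 3 tables.
query = build_union_fulltext_query({
    "Documents": ["Title", "Body"],
    "Comments": ["CommentText"],
    "Attachments": ["FileName", "Description"],
})
print(query)
```

The generated text would then be run through a SqlCommand with @SearchTerm supplied as a parameter rather than concatenated into the string.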

If you ever happen upon a forum where someone advises using WHERE CONTAINS() OR CONTAINS() to query full-text catalogs across multiple tables, make sure you set them straight!

Soybean Based Urinals

The company I work for, like so many others, is going green. The company is pushing bike-to-work initiatives, electric hand dryers instead of paper towels, and the latest waterless urinals. I have no doubt the electric hand dryers are saving both the environment and corporate funds (employees often just wipe their hands on their pants rather than wait a minute or more to use them). The urinals, however, got me thinking.

The waterless part is great, right? Saving lots of water? Sure, but the urinals smell terrible. They also seem nearly impossible to hit, as every waterless urinal seems to have urine all over the floor in front of it. Those things are downers, but they don’t really matter to the company, right? Well, I’m not so sure. Whatever they spent on the trial green bathroom facility is certainly wasted; traffic to the bathroom seems almost negligible. But that wasn’t even what got me thinking.

The urinal claimed to be made of 30% soy-based resin. At first, that made me happy. All of my friends know I hate soy-based foods, and the idea that I was urinating on something partially soy-based just made my day. However, I started to think back to the impact that corn subsidies promoting ethanol-based fuels had on worldwide food prices. I wondered what impact soy-based urinals might have if they suddenly became popular. I don’t know if you remember the report, but its findings were summarized as follows:

“When all the costs and benefits are tallied, the government, taxpayers, and consumers together would lose $6.1-$7.2 billion or $1.61-$1.92 per additional gallon produced during the 1986-94 period if ethanol subsidies were increased enough to prompt the ethanol industry to produce 2 billion gallons in 1995. Conversely, if ethanol production falls to zero, they would save some $6.8-$8.9 billion, or $1.35-$1.76 per gallon not produced.”

Make no mistake, they aren’t making soy into just urinals; they are also making biodiesel from it. Increased demand for soy biodiesel, or government subsidies for producing it, could create a similar cost to taxpayers or a worldwide swing in the price of soy. When the price of soy goes up, all kinds of crazy things happen. The cost of soy appears to be directly correlated with deforestation in the Amazon, as people scramble to clear forest to plant the bean. Though the markets are complicated, we know that as the price of food goes up, people starve. We saw this very clearly in 2008.

My conclusion: enjoy the smug satisfaction of your flushless urination as they raze the Amazon rainforest and children in third world countries drop dead of starvation. Go Green!

Google-like Suggest Textbox

We all love the Google search real-time suggestions.

It is possible to implement a similar feature in your own web development using the ASP.NET AJAX Control Toolkit.

Inside the toolkit you will find the AutoCompleteExtender. Below I will detail how to get this difficult extender working.

Don’t bother looking at the documentation on www.asp.net, it is more or less worthless.

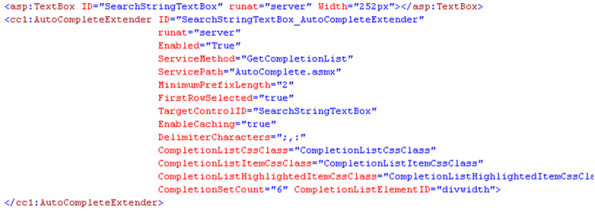

First, associate the AutoCompleteExtender with your text box.

As you can see, you must specify a ServiceMethod and a ServicePath. This is where it gets tricky.

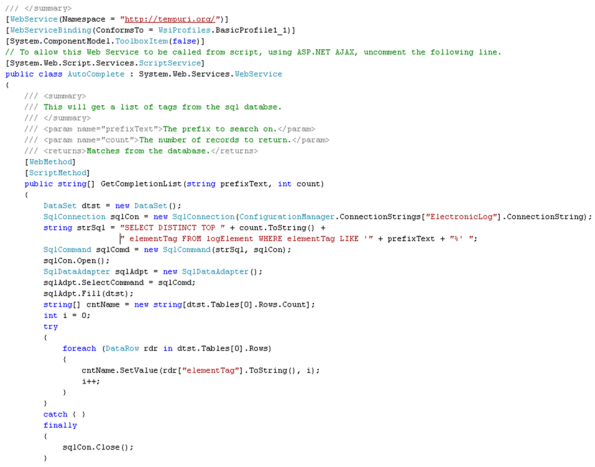

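You must create a web service (I used an .asmx file) whose method signature strictly matches what the AutoCompleteExtender calls. The method takes a string named prefixText and an int named count, and it returns a string array.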

The service will look like the following:

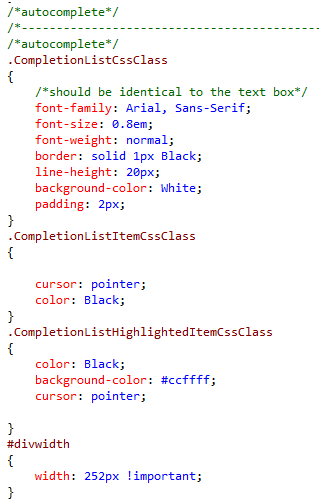
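The extender passes prefixText and count to whatever method ServiceMethod names and expects a string array back. In C#, that typically means a method like public string[] GetCompletionList(string prefixText, int count) on a class marked [ScriptService]. The filtering rule that method has to honor is sketched below in Python, purely for illustration. get_completion_list and its in-memory candidate list are hypothetical stand-ins. In a real service, the prefix match and the limit would belong in the SQL query itself (for example, TOP with a LIKE prefix match).

```python
def get_completion_list(prefix_text, count, candidates):
    """Return at most `count` candidates starting with `prefix_text`.

    Mirrors the contract the AutoCompleteExtender expects: a prefix in,
    a bounded list of suggestion strings out. `candidates` is a
    hypothetical in-memory list standing in for a database query.
    """
    if count <= 0:
        return []
    prefix = prefix_text.casefold()  # case-insensitive prefix match
    matches = [c for c in candidates if c.casefold().startswith(prefix)]
    # Sort for a stable, predictable suggestion order, then honor the limit.
    return sorted(matches, key=str.casefold)[:count]


print(get_completion_list("al", 2, ["Alaska", "alabama", "Arizona", "Albany"]))
```

Returning more than count items doesn't break the extender, but a query that fetches everything and trims afterward is exactly the kind of inefficiency that shows up once the table grows.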

This should get the service up and running. Notice that the query itself has to limit its results to the number of records you want returned. You can also play a few formatting tricks via CSS. Mine looks as follows:

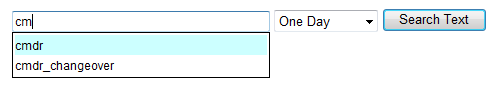

The final product looks as follows:

Some tinkering is needed to make the text box the same width as the div of the autocomplete box. This can be done in the CSS for the text box, making sure there are no extraneous borders or margins.

Using jQuery to call a Web Service in XSLT

Transforming XML into HTML or the like using XSL Transforms is a powerful tool. The biggest problem I faced when I began transforming certain XML tags into HTML controls is that the controls don’t do ANYTHING! Fortunately I figured out a way to use jQuery inside of the XSL transform to call an .asmx web service. I started by taking some XML, maybe something like:

<highlight>yellow</highlight>

In the XSLT, when you match the <highlight> tag, you can do something like this:

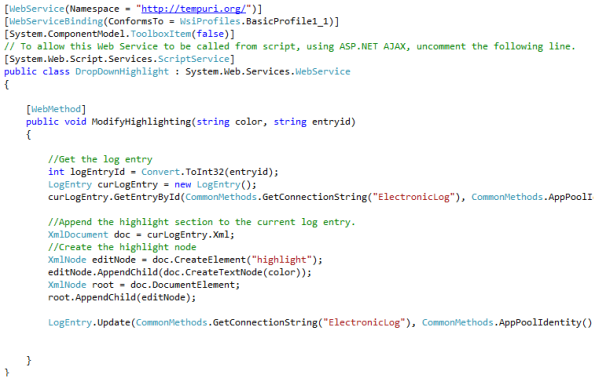

This gives you a drop-down box with the appropriate option selected. Of course, there will be a few more xsl:when options and an xsl:otherwise that acts as the default case, like the default in a switch statement. Now that you have a functionless drop-down, it's time to add the server-side code you want it to run when, say, the selection changes. So make yourself a Web Service (.asmx), maybe something like the following:

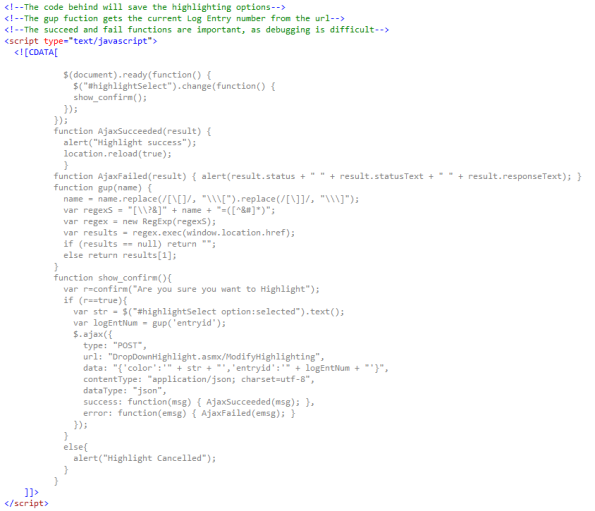

Now for the trick: calling this web service using jQuery. Of course, this jQuery must live inside your XSLT, which makes it even trickier to troubleshoot. I used the jQuery.ajax() function. It will look something like this:

Now you have a fully functional control generated by XSLT. Enjoy!

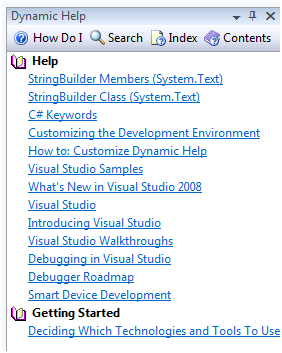
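When troubleshooting the call from inside the XSLT, it helps to know the HTTP exchange that a jQuery.ajax() call to a script-enabled .asmx method performs. The page POSTs a JSON body to ServiceName.asmx/MethodName with contentType "application/json; charset=utf-8". From .NET 3.5 on, the return value comes back wrapped in a "d" property. The sketch below reproduces that exchange in Python so it can be checked outside the browser. The service URL, the SaveHighlight method, and its parameter names are hypothetical, and the service class is assumed to carry the [ScriptService] attribute.

```python
import json
import urllib.request


def build_asmx_request(service_url, method, params):
    """Build (but do not send) the POST a jQuery.ajax() call would make
    to a script-enabled .asmx method: JSON body, JSON content type."""
    body = json.dumps(params).encode("utf-8")
    return urllib.request.Request(
        url=f"{service_url.rstrip('/')}/{method}",
        data=body,
        headers={"Content-Type": "application/json; charset=utf-8"},
        method="POST",
    )


def unwrap_asmx_response(raw_json):
    """ASP.NET 3.5+ wraps the method's return value as {"d": ...}."""
    payload = json.loads(raw_json)
    if isinstance(payload, dict) and "d" in payload:
        return payload["d"]
    return payload


# Hypothetical service and parameters for the highlight drop-down.
req = build_asmx_request(
    "http://intranet/HighlightService.asmx",
    "SaveHighlight",
    {"elementId": "h1", "color": "yellow"},
)
print(req.get_method(), req.full_url)
```

The parameter names in the JSON body must match the web method's parameter names exactly, and the success callback in jQuery has to read the result from the "d" property.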

Dynamic Help Removed in VS 2010

If you are like me, you love and cherish the dynamic help feature in Visual Studio. In fact, I never actually learned how to write code because this feature is so useful. I would just click on a class I had no idea how to use, and up would pop links to the MSDN documentation, which probably already held the code I needed to write.

This feature has been removed from VS 2010. Though you are still free to install the MSDN documentation locally, there is no dynamic help. Today is a sad day.

Installation order for Visual Studio and SQL Server

If you plan on installing the full version of SQL Server (instead of the Express edition) alongside Visual Studio, I recommend installing Visual Studio first, preferably without the SQL Server Express instance it offers to install.

If you’re doing a fresh install of VS 2008 and SQL 2008 on Windows 7, install in this order:

- VS 2008, WITHOUT SQL Server Express -> requires doing a ‘custom’ install

- VS 2008 SP1

- MSDN Library for VS 2008 SP1 (if you’re so inclined)

- SQL Server 2008

- SQL Server 2008 SP1

Comparing AD custom classes to AccountManagement

When developing code for an intranet setting, where users must log in to be granted access to a client computer, it is often best practice to use Active Directory to obtain user information for your application. This saves administrators from having to maintain and update a separate user database in SQL or elsewhere. A separate user database is an easy route to take while your network is small, but it becomes increasingly difficult to maintain as your user base grows.

Recently, I had to switch some existing web-based applications from a common SQL user database to Active Directory. To say the least, calls to the SQL user database were used liberally. Initially, I didn’t think anything of it and simply switched the code in the application's data access layer to get the info I wanted from AD instead of from SQL queries.

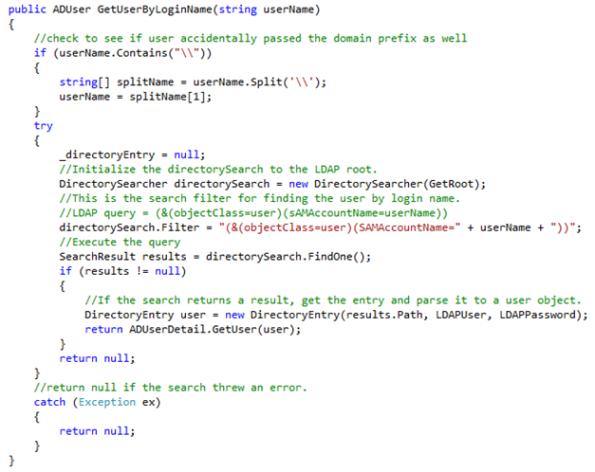

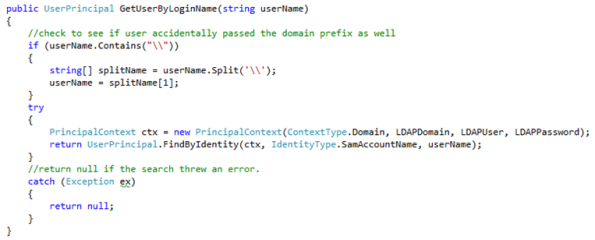

In testing, the application proved to be very slow, taking literally minutes to load each page. I assumed the problem lay within the LDAP queries to AD. I started doing some research and read that the classes I had written using the System.DirectoryServices namespace (they had to be compatible back to ASP.Net 2.0) now had built-in equivalents in .NET Framework 3.5, in the System.DirectoryServices.AccountManagement namespace. I set out to see whether these new classes would be more efficient than my own handwritten ones. I do have to say one thing: the code is much easier, with no more clunky LDAP query strings. Code that once looked like this:

Now looks like this:

Though not terribly impressive for getting a user by login name, the ease of getting groups is much more pronounced compared to the older DirectorySearcher methods. It makes me wish I hadn't spent all that time figuring out the LDAP queries for these things, though many were available via web searches.

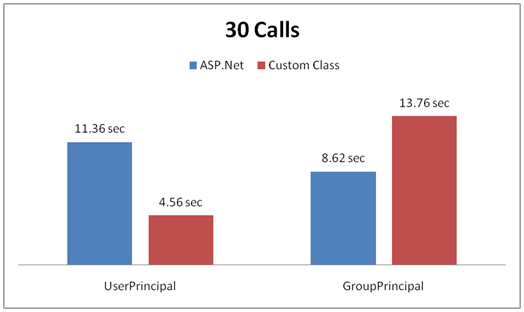

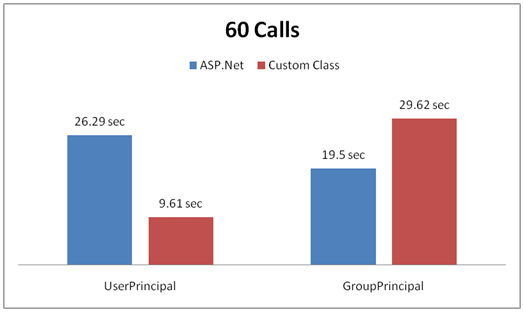

To see if the queries would be more efficient, I took advantage of an existing test harness I had prepared for my old ASP.Net 2.0 AD classes. I wrote a few new methods using the AccountManagement namespace that returned comparable information, such as the two get-user-by-login methods. One test harness compared the UserPrincipal, and the other compared the GroupPrincipal. A summary of the tests is bulleted below.

- Test harness was utilized to analyze time taken for multiple calls to active directory.

- For loops were utilized with both 30 and 60 calls to AD.

- Difference was taken between the time before the for loop and after the for loop to quantify the total time for the loop to execute.

- My classes were compared to those in System.DirectoryServices.AccountManagement, which is built into .NET Framework 3.5 & 4.0
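The harness described in these bullets can be sketched as follows. The lookup functions are hypothetical stand-ins for the two AD implementations. The percentage helper shows one possible way to express "X% faster" (time saved relative to the other implementation), not necessarily how the figures below were computed. The sketch is in Python rather than C#.

```python
import time


def time_calls(lookup, iterations):
    """Call `lookup` in a for loop and return the total elapsed seconds,
    taken as the difference between the clock before and after the loop."""
    start = time.perf_counter()
    for _ in range(iterations):
        lookup()
    return time.perf_counter() - start


def compare_implementations(custom_lookup, account_management_lookup,
                            iteration_counts=(30, 60)):
    """Time both (hypothetical) lookups for each loop size.

    Returns {iterations: (custom_seconds, account_management_seconds)}.
    """
    return {
        n: (time_calls(custom_lookup, n), time_calls(account_management_lookup, n))
        for n in iteration_counts
    }


def percent_less_time(custom_seconds, other_seconds):
    """How much less time (in %) the custom version took than the other one."""
    return (other_seconds - custom_seconds) * 100.0 / other_seconds


# Stand-in lookups; real ones would query Active Directory.
print(compare_implementations(lambda: None, lambda: None))
```

Running both loop sizes helps show whether the per-call cost stays constant or whether something like connection setup dominates the shorter run.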

The results are detailed in the charts below.

- My classes are 42-46% faster for returning the User

- My classes are 20-22% slower for returning the Group

- Either way, access to AD is incredibly slow and expensive!

The result is that whether I use my classes or the new AccountManagement classes, getting info from AD is slow. Though I have searched, I have been unable to find exactly why the queries are so slow. To make a long story even longer, I decided to go with the AccountManagement classes for 2 reasons. One, I am lazy, and hopefully Microsoft will make the underlying classes faster in coming releases of the framework. Two, the GroupPrincipal is roughly 20% faster than my own methods for retrieving group info, and the majority of what I do is checking whether a user is in a group. I only have to get the user once, but I have to fetch group membership info much more often.

A partial solution to the inefficiency of these queries is to get the majority of the information you need all at once, and store it in session state. It seems that it is more efficient to go get a ton of data from AD and stash it away somewhere than to repeatedly go get little tidbits of data. I typically create a session wrapper class to keep my session variables strongly typed, but that is for another blog entry.
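The "fetch once, stash in session" idea can be sketched like this, in Python rather than C#. A small wrapper over a dict-like session store does one bulk directory lookup per user and answers later group checks from the cached copy. UserInfo, UserSessionCache, and the fetch function are hypothetical names. The fetch function stands in for whatever AD call (for example, one built on the AccountManagement classes) you actually make.

```python
from dataclasses import dataclass


@dataclass
class UserInfo:
    """Hypothetical bundle of everything the app needs about a user."""
    login: str
    display_name: str
    groups: frozenset = frozenset()


class UserSessionCache:
    """Fetch a user's info from the directory once per session, then serve
    repeated lookups (mostly group checks) from the cached copy."""

    def __init__(self, session, fetch_user_info):
        self._session = session        # any dict-like per-user session store
        self._fetch = fetch_user_info  # hypothetical: one bulk AD lookup

    def user(self, login):
        key = "UserInfo:" + login
        info = self._session.get(key)
        if info is None:
            # Only the first call per session pays the slow AD round trip.
            info = self._fetch(login)
            self._session[key] = info
        return info

    def is_in_group(self, login, group):
        # Compare case-insensitively, since AD group names are not case-sensitive.
        target = group.casefold()
        return any(g.casefold() == target for g in self.user(login).groups)
```

The typed UserInfo object plays the role of the strongly typed session wrapper. Callers never touch raw session keys or cast session values themselves.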